Russ Allbery: Review: Some Desperate Glory

| Publisher: | Tordotcom |
| Copyright: | 2023 |
| ISBN: | 1-250-83499-6 |
| Format: | Kindle |
| Pages: | 438 |


Autocrypt: addr=anarcat@torproject.org; prefer-encrypt=nopreference;

keydata=xsFNBEogKJ4BEADHRk8dXcT3VmnEZQQdiAaNw8pmnoRG2QkoAvv42q9Ua+DRVe/yAEUd03EOXbMJl++YKWpVuzSFr7IlZ+/lJHOCqDeSsBD6LKBSx/7uH2EOIDizGwfZNF3u7X+gVBMy2V7rTClDJM1eT9QuLMfMakpZkIe2PpGE4g5zbGZixn9er+wEmzk2mt20RImMeLK3jyd6vPb1/Ph9+bTEuEXi6/WDxJ6+b5peWydKOdY1tSbkWZgdi+Bup72DLUGZATE3+Ju5+rFXtb/1/po5dZirhaSRZjZA6sQhyFM/ZhIj92mUM8JJrhkeAC0iJejn4SW8ps2NoPm0kAfVu6apgVACaNmFb4nBAb2k1KWru+UMQnV+VxDVdxhpV628Tn9+8oDg6c+dO3RCCmw+nUUPjeGU0k19S6fNIbNPRlElS31QGL4H0IazZqnE+kw6ojn4Q44h8u7iOfpeanVumtp0lJs6dE2nRw0EdAlt535iQbxHIOy2x5m9IdJ6q1wWFFQDskG+ybN2Qy7SZMQtjjOqM+CmdeAnQGVwxowSDPbHfFpYeCEb+Wzya337Jy9yJwkfa+V7e7Lkv9/OysEsV4hJrOh8YXu9a4qBWZvZHnIO7zRbz7cqVBKmdrL2iGqpEUv/x5onjNQwpjSVX5S+ZRBZTzah0w186IpXVxsU8dSk0yeQskblrwARAQABzSlBbnRvaW5lIEJlYXVwcsOpIDxhbmFyY2F0QHRvcnByb2plY3Qub3JnPsLBlAQTAQgAPgIbAwULCQgHAwUVCgkICwUWAgMBAAIeAQIXgBYhBI3JAc5kFGwEitUPu3khUlJ7dZIeBQJihnFIBQkacFLiAAoJEHkhUlJ7dZIeXNAP/RsX+27l9K5uGspEaMH6jabAFTQVWD8Ch1om9YvrBgfYtq2k/m4WlkMh9IpT89Ahmlf0eq+V1Vph4wwXBS5McK0dzoFuHXJa1WHThNMaexgHhqJOs

S60bWyLH4QnGxNaOoQvuAXiCYV4amKl7hSuDVZEn/9etDgm/UhGn2KS3yg0XFsqI7V/3RopHiDT+k7+zpAKd3st2V74w6ht+EFp2Gj0sNTBoCdbmIkRhiLyH9S4B+0Z5dUCUEopGIKKOSbQwyD5jILXEi7VTZhN0CrwIcCuqNo7OXI6e8gJd8McymqK4JrVoCipJbLzyOLxZMxGz8Ki0b9O844/DTzwcYcg9I1qogCsGmZfgVze2XtGxY+9zwSpeCLeef6QOPQ0uxsEYSfVgS+onCesSRCgwAPmppPiva+UlGuIMun87gPpQpV2fqFg/V8zBxRvs6YTGcfcQjfMoBHmZTGb+jk1//QAgnXMO7fGG38YH7iQSSzkmodrH2s27ZKgUTHVxpBL85ptftuRqbR7MzIKXZsKdA88kjIKKXwMmez9L1VbJkM4k+1Kzc5KdVydwi+ujpNegF6ZU8KDNFiN9TbDOlRxK5R+AjwdS8ZOIa4nci77KbNF9OZuO3l/FZwiKp8IFJ1nK7uiKUjmCukL0od/6X2rJtAzJmO5Co93ZVrd5r48oqUvjklzzsBNBFmeC3oBCADEV28RKzbv3dEbOocOsJQWr1R0EHUcbS270CrQZfb9VCZWkFlQ/1ypqFFQSjmmUGbNX2CG5mivVsW6Vgm7gg8HEnVCqzL02BPY4OmylskYMFI5Bra2wRNNQBgjg39L9XU4866q3BQzJp3r0fLRVH8gHM54Jf0FVmTyHotR/Xiw5YavNy2qaQXesqqUv8HBIha0rFblbuYI/cFwOtJ47gu0QmgrU0ytDjlnmDNx4rfsNylwTIHS0Oc7Pezp7MzLmZxnTM9b5VMprAXnQr4rewXCOUKBSto+j4rD5/77DzXw96bbueNruaupb2Iy2OHXNGkB0vKFD3xHsXE2x75NBovtABEBAAHCwqwEGAEIACAWIQSNyQHOZBRsBIrVD7t5IVJSe3WSHgUCWZ4LegIbAgFACRB5IV

JSe3WSHsB0IAQZAQgAHRYhBHsWQgTQlnI7AZY1qz6h3d2yYdl7BQJZngt6AAoJED6h3d2yYdl7CowH/Rp7GHEoPZTSUK8Ss7crwRmuAIDGBbSPkZbGmm4bOTaNs/gealc2tsVYpoMx7aYgqUW+t+84XciKHT+bjRv8uBnHescKZgDaomDuDKc2JVyx6samGFYuYPcGFReRcdmH0FOoPCn7bMW5mTPztV/wIA80LZD9kPKIXanfUyI3HLP0BPwZG4WTpKzJaalR1BNwu2oF6kEK0ymH3LfDiJ5Sr6emI2jrm4gH+/19ux/x+ST4tvm2PmH3BSQOPzgiqDiFd7RZoAIhmwr3FW4epsK9LtSxsi9gZ2vATBKO1oKtb6olW/keQT6uQCjqPSGojwzGRT2thEANH+5t6Vh0oDPZhrKUXRAAxHMBNHEaoo/M0sjZo+5OF3Ig1rMnI6XbKskLv6hu13cCymW0w/5E4XuYnyQ1cNC3pLvqDQbDx5mAPfBVHuqxJdRLQ3yDM/D2QIsxnkzQwi0FsJuni4vuJzWK/NHHDCvxMCh0YmSgbptUtgW8/niatd2Y6MbfRGxUHoctKtzqzivC8hKMTFrj4AbZhg/e9QVCsh5zSXtpWP0qFDJsxRMx0/432n9d4XUiy4U672r9Q09SsynB3QN6nTaCTWCIxGxjIb+8kJrRqTGwy/PElHX6kF0vQUWZNf2ITV1sd6LK/s/7sH+x4rzgUEHrsKr/qPvY3rUY/dQLd+owXesY83ANOu6oMWhSJnPMksbNa4tIKKbjmw3CFIOfoYHOWf3FtnydHNXoXfj4nBX8oSnkfhLILTJgf6JDFXfw6mTsv/jMzIfDs7PO1LK2oMK0+prSvSoM8bP9dmVEGIurzsTGjhTOBcb0zgyCmYVD3S48vZlTgHszAes1zwaCyt3/tOwrzU5JsRJVns+B/TUYaR/u3oIDMDygvE5ObWxXaFVnCC59r+zl0FazZ0ouyk2AYIR

zHf+n1n98HCngRO4FRel2yzGDYO2rLPkXRm+NHCRvUA/i4zGkJs2AV0hsKK9/x8uMkBjHAdAheXhY+CsizGzsKjjfwvgqf84LwAzSDdZqLVE2yGTOwU0ESiArJwEQAJhtnC6pScWjzvvQ6rCTGAai6hrRiN6VLVVFLIMaMnlUp92EtgVSNpw6kANtRTpKXUB5fIPZVUrVdfEN06t96/6LE42tgifDAFyFTZY5FdHHri1GG/Cr39MpW2VqCDCtTTPVWHTUlU1ZG631BJ+9NB+ce58TmLr6wBTQrT+W367eRFBC54EsLNb7zQAspCn9pw1xf1XNHOGnrAQ4r9BXhOW5B8CzRd4nLRQwVgtw/c5M/bjemAOoq2WkwN+0mfJe4TSfHwFUozXuN274X+0Gr10fhp8xEDYuQM0qu6W3aDXMBBwIu0jTNudEELsTzhKUbqpsBc9WjwNMCZoCuSw/RTpFBV35mXbqQoQgbcU7uWZslLl9Wvv/C6rjXgd+GeX8SGBjTqq1ZkTv5UXLHTNQzPnbkNEExzqToi/QdSjFMIACnakeOSxc0ckfnsd9pfGv1PUyPyiwrHiqWFzBijzGIZEHxhNGFxAkXwTJR7Pd40a7RDxwbO6p/TSIIum41JtteehLHwTRDdQNMoyfLxuNLEtNYS0uR2jYI1EPQfCNWXCdT2ZK/l6GVP6jyB/olHBIOr+oVXqJh+48ki8cATPczhq3fUr7UivmguGwD67/4omZ4PCKtz1hNndnyYFS9QldEGo+AsB3AoUpVIA0XfQVkxD9IZr+Zu6aJ6nWq4M2bsoxABEBAAHCwXYEGAEIACACGwwWIQSNyQHOZBRsBIrVD7t5IVJSe3WSHgUCWPerZAAKCRB5IVJSe3WSHkIgEACTpxdn/FKrwH0/LDpZDTKWEWm4416l13RjhSt9CUhZ/Gm2GNfXcVTfoF/jKXXgjHcV1DHjfLUPmPVwMdqlf5ACOiFqIUM2ag/OEARh356w

YG7YEobMjX0CThKe6AV2118XNzRBw/S2IO1LWnL5qaGYPZONUa9Pj0OaErdKIk/V1wge8Zoav2fQPautBcRLW5VA33PH1ggoqKQ4ES1hc9HC6SYKzTCGixu97mu/vjOa8DYgM+33TosLyNy+bCzw62zJkMf89X0tTSdaJSj5Op0SrRvfgjbC2YpJOnXxHr9qaXFbBZQhLjemZi6zRzUNeJ6A3Nzs+gIc4H7s/bYBtcd4ugPEhDeCGffdS3TppH9PnvRXfoa5zj5bsKFgjqjWolCyAmEvd15tXz5yNXtvrpgDhjF5ozPiNp/1EeWX4DxbH2i17drVu4fXwauFZ6lcsAcJxnvCA28RlQlmEQu/gFOx1axVXf6GIuXnQSjQN6qJbByUYrdc/cFCxPO2/lGuUxnufN9Tvb51Qh54laPgGLrlD2huQeSD9Sxa0MNUjNY0qLqaReT99Ygb2LPYGSLoFVx9iZz6sZNt07LqCx9qNgsJwsdmwYsNpMuFbc7nkWjtlEqzsXZHTvYN654p43S+hcAhmmOzQZcew6h71fAJLciiqsPBnCEdgCGFAWhZZdPkMA==

Autocrypt: addr=anarcat@torproject.org; prefer-encrypt=nopreference;

keydata=xjMEZHZPzhYJKwYBBAHaRw8BAQdAWdVzOFRW6FYVpeVaDo3sC4aJ2kUW4ukdEZ36UJLAHd7NKUFudG9pbmUgQmVhdXByw6kgPGFuYXJjYXRAdG9ycHJvamVjdC5vcmc+wpUEExYIAD4WIQS7ts1MmNdOE1inUqYCKTpvpOU0cwUCZHZgvwIbAwUJAeEzgAULCQgHAwUVCgkICwUWAgMBAAIeAQIXgAAKCRACKTpvpOU0c47SAPdEqfeHtFDx9UPhElZf7nSM69KyvPWXMocu9Kcu/sw1AQD5QkPzK5oxierims6/KUkIKDHdt8UcNp234V+UdD/ZB844BGR2UM4SCisGAQQBl1UBBQEBB0CYZha2IMY54WFXMG4S9/Smef54Pgon99LJ/hJ885p0ZAMBCAfCdwQYFggAIBYhBLu2zUyY104TWKdSpgIpOm+k5TRzBQJkdlDOAhsMAAoJEAIpOm+k5TRzBg0A+IbcsZhLx6FRIqBJCdfYMo7qovEo+vX0HZsUPRlq4HkBAIctCzmH3WyfOD/aUTeOF3tY+tIGUxxjQLGsNQZeGrQI

sq autocrypt decode | gpg --import


Leonardo and I are happy to announce the release of a first follow-up release 0.1.1 of our dtts package which got to CRAN in its initial upload last year.

dtts builds upon our nanotime package as well as the beloved data.table to bring high-performance and high-resolution indexing at the nanosecond level to data frames. dtts aims to bring the time-series indexing versatility of xts (and zoo) to the immense power of data.table while supporting highest nanosecond resolution.

This release fixes a bug flagged by valgrind and brings several internal enhancements.

Courtesy of my CRANberries, there is also a diffstat report for this release. Questions, comments, issue tickets can be brought to the GitHub repo. If you like this or other open-source work I do, you can now sponsor me at GitHub.

Changes in version 0.1.1 (2023-08-08)

- A simplification was applied to the C++ interface glue code (#9 fixing #8)

- The package no longer enforces the C++11 compilation standard (#10)

- An uninitialized memory read has been corrected (#11)

- A new function ops has been added (#12)

- Function names no longer start with a dot (#13)

- Arbitrary index columns are now supported (#13)

This post by Dirk Eddelbuettel originated on his Thinking inside the box blog. Please report excessive re-aggregation in third-party for-profit settings.

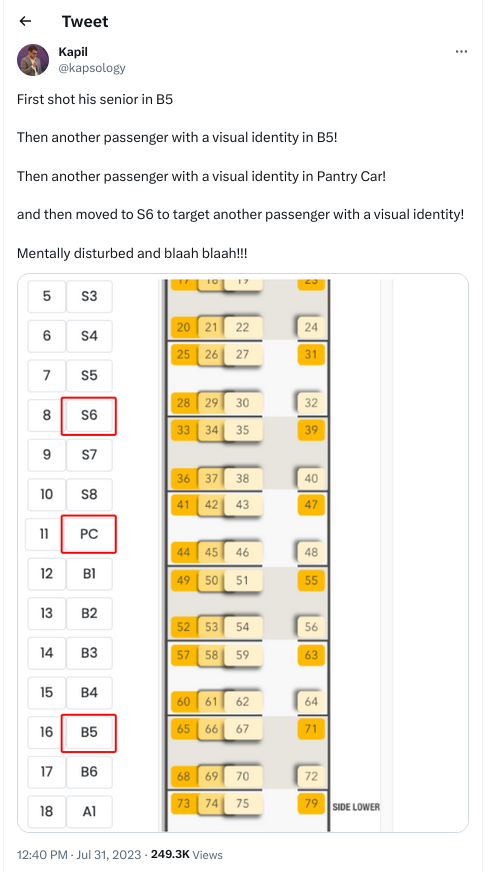

Internet shutdowns impact women disproportionately as more videos of assaults show


Of course, as I shared in the earlier blog post, that gentleman has been arrested under Section 66A. In any case, in the last few years, this Government has chosen to pass most of its bills without any discussion. Some of the bills I will share below.

The attitude of this Govt. can be seen through this cartoon

I had tried to actually delink the two but none of the banks co-operated in the same


Aadhar actually has a number of downsides; most people know about the AEPS fraud that has been committed time and time again. I have shared in previous blog posts the issue with biometric data as well as master biometric data that can be and is being used for fraud. GOI is either ignorant or doesn't give a fig as to what happens to you, citizen of India. I could go on and on but it would result in nothing constructive, so I will stop now.

But that is not the whole story at all.

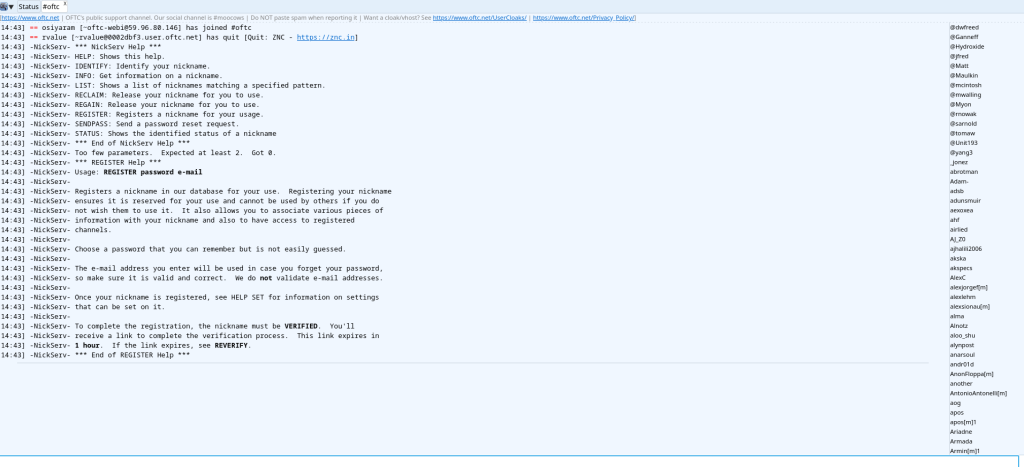

Because of Debconf happening in India, and that too in Kochi, I decided to try out other tools to see how IRC is doing. The Debian wiki page shares a lot about IRC clients and is also helpful in sharing stats by popcon (popularity-contest, thanks to whoever did that), and it did help me in trying two of the most popular clients, Pidgin and Hexchat, both of which show higher numbers. This might simply be because both get downloaded when you install the desktop version, or they might be popular in themselves; I have no idea one way or the other. But still I wanted to see what sort of experience I could expect from both of them in 2023. One of the other things I noticed is that Pidgin is not a participating organization in IRCv3 while Hexchat is. Before venturing in, I also decided to take a look at oftc.net. I came to know that for some time now, OFTC has been using web verify. I didn't see much of a difference between hcaptcha and gcaptcha other than the fact that the images looked more like oil paintings than anything else. While I could easily figure out the odd man out (or odd men out, to be more accurate), I wonder how a person with low or no vision would pass that? Also, much of our world is pretty much context based, so figuring out who the odd one is (or the odd ones are) could be tricky. I do not have answers to the above other than to say more work needs to be done by OFTC in that area. I did get a link that I verified. But I am getting ahead of the story. Another thing I understood is that for some reason OFTC is also not participating in IRCv3; I have no clue why not :(


/msg nickserv help

What you are doing is asking NickServ what services it has, and NickServ shares the list of services it offers. After looking into it, the one you are looking for is register:

/msg nickserv register

Both the commands tell you what you need to do, as can be seen by this:

/msg nickserv register 1234xyz;0x xyz@xyz.com

Now the thing to remember is that the email needs to be valid and in your control, as registering generates a link with hcaptcha. Interestingly, their accessibility signup fails or errors out. I just entered my email and it errored out. Anyway, back to it. Even after completing the puzzle, even with the valid username and password, neither Pidgin nor Hexchat would let me in. Neither of the clients was helpful in figuring out what was going wrong.

At this stage, I decided to look at the IRCv3 specs to see if they would help in any way, and came across this. One would have thought that this is one of the more urgent things that need to be fixed, but for reasons unknown it's still in draft mode. Maybe they (the participants) are not in consensus, no idea. Unfortunately, it seems that the participants of IRCv3 have chosen a sort of closed working model, as the channel is restricted. The only notes of any consequence are being shared by Ilmari Lauhakangas from Finland. Apparently, Mr/Ms/they Ilmari is also a LibreOffice hacker. It is possible that there is or has been a lot of drama before, and that's why things are the way they are. Either way, it doesn't tell me when this will be fixed, if ever. For people who are on mobiles and whatnot, without Element, it would be 10x harder.

Update: Saw this discussion on GitHub. Don't see a way out.

It seems I will be unable to be part of Debconf Kochi 2023. Best of luck to all the participants, and please share as much as possible of what happens during the event.

A real sad state of affairs

Update: There is a conditional reopening, whatever that means.

When I saw the videos, the first thing I felt was being powerless, powerless to do anything about it. The second was that if I do not write about it, amplify it and let others know about it, then what's the use of being able to blog?

While I felt a bit foolish, I felt it would be nice to binge on some webseries. Little did I know that both Northshore and Alaska Daily would have stories similar to what is happening here.

While the story in Alaska Daily is fictional, it closely resembles a real newspaper called the Anchorage Daily News. Even there it is about the Inuit women, one of the marginalized communities in Alaska. The only difference I can see between GOI and the Alaskan Government is that the Alaskan Government was much more subtle in doing the same things. There are some differences though. First, the State is and was responsive to the local press, and apart from one close call for one of its reporters, most reporters do not have to think about their own life being in peril. Here, the press cannot look after either their livelihood or their life. It was a juvenile kid who actually shot the video, uploaded it and made it viral. One needs to just remember the case details of Siddique Kappan. Just for sharing the news and the video he was arrested. Bail was denied to him time and time again, citing that the Police were investigating. Only after 2 years and 3 months did he get bail, and that too because the Police were unable to show any prima facie evidence for any of their charges.

One of the better interviews though was of Vrinda Grover. For those who don't know her, her Wikipedia page does tell a bit about her, although it is woefully incomplete. For example, most recently she had relentlessly pursued the unconstitutional Internet shutdown that happened in Kashmir for 5 months. Just like in Manipur, the shutdown was there to bury crimes either committed or being facilitated by the State. For the issues of livelihood, one can take the cases of Bipin Yadav and Rashid Hussain. Both were fired by their employer Dainik Bhaskar because they asked the BJP MP Smriti Irani what she has done for the state. The problem for Dainik Bhaskar, or for any other mainstream media, is that most of them rely on Government advertisements. Private investment in India has fallen to record lows, mostly due to the policies made by the Centre. If any entity or sector grows a bit, then either Adani or Ambani will one way or the other take it. So, for most first and second generation entrepreneurs it doesn't make sense to grow and then finally sell to one of these corporates at a loss.


GOI on Adani, Ambani side of any deal. The MSME sector, which is and used to be the second highest employer, hasn't been able to recover from the shocks of demonetization, GST and then the pandemic, each resulting in more and more closures and shutdowns. Joblessness has gone up tremendously in North India, which the Government tries to deny.

The most interesting point in all the above examples is that within a month or less, whatever the media reports gets scrubbed. Even the firing of the journos, which was covered by some of the mainstream media, isn't there anymore. I have to use secondary sources instead of primary sources. One can think of the chilling effects on reportage due to the above. The sad fact is that even with all the money in the world, the PM is unable to come to the Parliament to face questions.

Even the media landscape has been altered substantially within the last few years. Both Adani and Ambani have distributed the media pie between themselves. One of the last bastions of the free press, NDTV, was bought by Adani in a hostile takeover. Both Ambani and Adani are close to this Government. In fact, there is no sector in which one or the other is not present. Media houses like Newsclick, The Wire etc. that are a fraction of mainstream press are where most of the youth have been going to get their news, as they are not partisan. Although even there, GOI has time and again interfered. The Wire has had too many 504 Gateway timeouts in recent months, and they have been forced to move most of their journalism from online text to video, or rather YouTube, in order to escape both the censoring and the timeouts shared above. In such a hostile environment, how both the organizations are somehow able to survive is a miracle. Most local reportage is also going to YouTube as that's the best way for them to not run into Govt. censors. Not an ideal situation, but that's the way it is.

The difference between Indian and Israeli media can be seen through this


Like each month, have a look at the work funded by Freexian's Debian LTS offering.


exec bwrap --ro-bind $(pwd) $(pwd) --ro-bind /usr /usr --symlink usr/lib64 /lib64 --symlink usr/lib /lib --proc /proc --dev /dev --unshare-pid --unshare-net --die-with-parent ./chat

Here are some examples of its work. As you can see, some answers are wrong (Helium is lighter than air), some are guesses (there is no evidence of life outside our solar system), and the questions weren't always well answered (the issue of where we might find life wasn't addressed). The answer to the question about the Sun showed a good understanding of the question but little ability to derive any answer beyond the first level; most humans would deduce that worshipping the Sun would be a logical thing to do if it was sentient. Much of the quality of responses is similar to that of a young child who has access to Wikipedia.

> tell me about dinosaurs

| Series: | Sun Chronicles #2 |
| Publisher: | Tor |
| Copyright: | 2023 |
| ISBN: | 1-250-86701-0 |
| Format: | Kindle |
| Pages: | 725 |

Crossing the ocean of stars we leave our home behind us.

We are the spears cast at the furious heaven

And we will burn one by one into ashes

As with the last sparks we vanish.

This memory we carry to our own death which awaits us

And from which none of us will return.

Do not forget. Goodbye forever.

This is not great poetry, but it explains so much about the psychology of the characters. Sun repeatedly describes herself and her allies as spears cast at the furious heaven. Her mother's life mission was to make Chaonia a respected independent power. Hers is much more than that, reaching back into myth for stories of impossible leaps into space, burning brightly against the hostile power of the universe itself.

A question about a series like this is why one should want to read about a gender-swapped Alexander the Great in space, rather than just reading about Alexander himself. One good (and sufficient) answer is that both the gender swap and the space parts are inherently interesting. But the other place that Elliott uses the science fiction background is to give Sun motives beyond sheer personal ambition.

At a critical moment in the story, just like Alexander, Sun takes a detour to consult an Oracle. Because this is a science fiction novel, it's a great SF set piece involving a mysterious AI. But also because this is a science fiction story, Sun doesn't only ask about her personal ambitions. I won't spoil the exact questions; I think the moment is better not knowing what she'll ask. But they're science fiction questions, reader questions, the kinds of things Elliott has been building curiosity about for a book and a half by the time we reach that scene. Half the fun of reading a good epic space opera is learning the mysteries hidden in the layers of world-building. Aligning the goals of the protagonist with the goals of the reader is a simple storytelling trick, but oh, so effective.

Structurally, this is not that great of a book. There's a lot of build-up and only some payoff, and there were several bits I found grating. But I am thoroughly invested in this universe now. The third book can't come soon enough.

Followed by Lady Chaos, which is still being written at the time of this review.

Rating: 7 out of 10

apt-get install zram-tools

# Compression algorithm selection

# Speed: lz4 > zstd > lzo

# Compression: zstd > lzo > lz4

# This is not inclusive of all the algorithms available in the latest kernels

# See /sys/block/zram0/comp_algorithm (when the zram module is loaded) to check

# the currently set and available algorithms for your kernel [1]

# [1] https://github.com/torvalds/linux/blob/master/Documentation/blockdev/zram.txt#L86

ALGO=zstd

# Specifies the amount of RAM that should be used for zram

# based on a percentage of the total available memory

# This takes precedence and overrides SIZE below

PERCENT=30

# Specifies a static amount of RAM that should be used for

# the ZRAM devices, measured in MiB

# SIZE=256000

# Specifies the priority for the swap devices, see swapon(2)

# for more details. A higher number indicates higher priority

# This should probably be higher than hdd/ssd swaps.

# PRIORITY=100

systemctl restart zramswap.service

# This config file enables a /dev/zram0 swap device with the following

# properties:

# * size: 50% of available RAM or 4GiB, whichever is less

# * compression-algorithm: kernel default

#

# This device's properties can be modified by adding options under the

# [zram0] section below. For example, to set a fixed size of 2GiB, set

# zram-size = 2GiB .

[zram0]

zram-size = ceil(ram * 30/100)

compression-algorithm = zstd

swap-priority = 100

fs-type = swap

systemctl daemon-reload

systemctl start systemd-zram-setup@zram0.service
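Both configurations above ask for 30% of RAM (PERCENT=30 for zram-tools, zram-size = ceil(ram * 30/100) for the generator). As a rough sketch of that arithmetic only, and not the code either tool actually runs, the hypothetical shell helpers below compute the rounded-up size in MiB. The function names are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: ceil(total_mib * percent / 100) using integer
# arithmetic only. $1 = total RAM in MiB, $2 = percentage.
zram_size_mib() {
    echo $(( ($1 * $2 + 99) / 100 ))
}

# Hypothetical helper: read MemTotal (reported in kB) from /proc/meminfo
# and print it in MiB, rounded down.
mem_total_mib() {
    awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo
}
```

For a machine with 32768 MiB of RAM, `zram_size_mib 32768 30` prints 9831; on a live system, `zram_size_mib "$(mem_total_mib)" 30` gives the figure to expect for the zram device.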

free -tg

total used free shared buff/cache available

Mem: 31 12 11 3 11 18

Swap: 10 2 8

Total: 41 14 19

sudo stress-ng --vm=1 --vm-bytes=50G -t 120

stress-ng: info: [1496310] setting to a 120 second (2 mins, 0.00 secs) run per stressor

stress-ng: info: [1496310] dispatching hogs: 1 vm

stress-ng: info: [1496312] vm: gave up trying to mmap, no available memory, skipping stressor

stress-ng: warn: [1496310] vm: [1496311] aborted early, out of system resources

stress-ng: info: [1496310] vm:

stress-ng: warn: [1496310] 14 System Management Interrupts

stress-ng: info: [1496310] passed: 0

stress-ng: info: [1496310] failed: 0

stress-ng: info: [1496310] skipped: 1: vm (1)

stress-ng: info: [1496310] successful run completed in 10.04s

[Note: the original version of this post named the author of the referenced blog post, and the tone of my writing could be construed to be mocking or otherwise belittling them.

While that was not my intention, I recognise that was a possible interpretation, and I have revised this post to remove identifying information and try to neutralise the tone.

On the other hand, I have kept the identifying details of the domain involved, as there are entirely legitimate security concerns that result from the issues discussed in this post.]

I have spoken before about why it is tricky to redact private keys.

Although that post demonstrated a real-world, presumably-used-in-the-wild private key, I've been made aware of commentary along the lines of this representative sample:


I find it hard to believe that anyone would take their actual production key and redact it for documentation. Does the author have evidence of this in practice, or did they see example keys and assume they were redacted production keys?

Well, buckle up, because today's post is another real-world case study, with rather higher stakes than the previous example.

xs.

Based on the steps I explained previously, it is relatively straightforward to retrieve the entire, intact private key.

72bef096997ec59a671d540d75bd1926363b2097eb9fe10220b2654b1f665b54

Searching for certificates which use that key fingerprint, we find one result: a certificate for hiltonhotels.jp (and a bunch of other, related, domains, as subjectAltNames).
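For anyone wanting to check one of their own keys the same way, here is a minimal sketch. It assumes the fingerprint above is the SHA-256 digest of the key's DER-encoded SubjectPublicKeyInfo, which is my assumption about the format rather than something spelled out here, and the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the SHA-256 (lowercase hex) of the DER-encoded
# public key (SubjectPublicKeyInfo) belonging to a PEM private key file.
# Assumption: this is the fingerprint format certificate search tools accept.
spki_sha256() {
    openssl pkey -in "$1" -pubout -outform DER |
        openssl dgst -sha256 -r |
        awk '{ print $1 }'
}
```

Calling `spki_sha256 some-key.pem` (the file name is a placeholder) prints a 64-character hex string that can be compared against a published fingerprint.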

As of the time of writing, that certificate is not marked as revoked, and appears to be the same certificate that is currently presented to visitors of that site.

This is, shall we say, not great.

Anyone in possession of this private key (which, I should emphasise, has presumably been public information since the post's publication date of February 2023) has the ability to completely transparently impersonate the sites listed in that certificate.

That would provide an attacker with the ability to capture any data a user entered, such as personal information, passwords, or payment details, and also modify what the user's browser received, including injecting malware or other unpleasantness.

In short, no good deed goes unpunished, and this attempt to educate the world at large about the benefits of secure key storage has instead published private key material.

Remember, kids: friends don't let friends post redacted private keys to the Internet.

I'm calling time on DNSSEC. Last week, prompted by a change in my DNS hosting setup, I began removing it from the few personal zones I had signed. Then this Monday the .nz ccTLD experienced a multi-day availability incident triggered by the annual DNSSEC key rotation process. This incident broke several of my unsigned zones, which led me to say very unkind things about DNSSEC on Mastodon, and now I feel compelled to more completely explain my thinking:

For almost all domains and use-cases, the costs and risks of deploying DNSSEC outweigh the benefits it provides. Don't bother signing your zones.

The .nz incident, while topical, is not the motivation or the trigger for this conclusion. Had it been a novel incident, it would still have been annoying, but novel incidents are how we learn so I have a small tolerance for them. The problem with DNSSEC is precisely that this incident was not novel, just the latest in a long and growing list.

It's a clear pattern. DNSSEC is complex and risky to deploy. Choosing to sign your zone will almost inevitably mean that you will experience lower availability for your domain over time than if you leave it unsigned. Even if you have a team of DNS experts maintaining your zone and DNS infrastructure, the risk of routine operational tasks triggering a loss of availability (unrelated to any attempted attacks that DNSSEC may thwart) is very high - almost guaranteed to occur. Worse, because of the nature of DNS and DNSSEC, these incidents will tend to be prolonged and out of your control to remediate in a timely fashion.

The only benefit you get in return for accepting this almost certain reduction in availability is trust in the integrity of the DNS data a subset of your users (those who validate DNSSEC) receive. Trusted DNS data that is then used to communicate across an untrusted network layer. An untrusted network layer which you are almost certainly protecting with TLS which provides a more comprehensive and trustworthy set of security guarantees than DNSSEC is capable of, and provides those guarantees to all your users regardless of whether they are validating DNSSEC or not.

In summary, in our modern world where TLS is ubiquitous, DNSSEC provides only a thin layer of redundant protection on top of the comprehensive guarantees provided by TLS, but adds significant operational complexity, cost and a high likelihood of lowered availability.

In an ideal world, where the deployment cost of DNSSEC and the risk of DNSSEC-induced outages were both low, it would absolutely be desirable to have that redundancy in our layers of protection. In the real world, given the DNSSEC protocol we have today, the choice to avoid its complexity and rely on TLS alone is not at all painful or risky to make as the operator of an online service. In fact, it's the prudent choice that will result in better overall security outcomes for your users.

Ignore DNSSEC and invest the time and resources you would have spent deploying it improving your TLS key and certificate management.

Ironically, the one use-case where I think a valid counter-argument for this position can be made is TLDs (including ccTLDs such as .nz). Despite its many failings, DNSSEC is an Internet Standard, and as infrastructure providers, TLDs have an obligation to enable its use. Unfortunately this means that everyone has to bear the costs, complexities and availability risks that DNSSEC burdens these operators with. We can't avoid that fact, but we can avoid creating further costs, complexities and risks by choosing not to deploy DNSSEC on the rest of our non-TLD zones.


| Series: | Discworld #29 |

| Publisher: | Harper |

| Copyright: | November 2002 |

| Printing: | August 2014 |

| ISBN: | 0-06-230740-1 |

| Format: | Mass market |

| Pages: | 451 |

Keep the peace. That was the thing. People often failed to understand what that meant. You'd go to some life-threatening disturbance like a couple of neighbors scrapping in the street over who owned the hedge between their properties, and they'd both be bursting with aggrieved self-righteousness, both yelling, their wives would either be having a private scrap on the side or would have adjourned to a kitchen for a shared pot of tea and a chat, and they all expected you to sort it out. And they could never understand that it wasn't your job. Sorting it out was a job for a good surveyor and a couple of lawyers, maybe. Your job was to quell the impulse to bang their stupid fat heads together, to ignore the affronted speeches of dodgy self-justification, to get them to stop shouting and to get them off the street. Once that had been achieved, your job was over. You weren't some walking god, dispensing finely tuned natural justice. Your job was simply to bring back peace.

When Vimes is thrown back in time, he has to pick up the role of his own mentor, the person who taught him what policing should be like. His younger self is right there, watching everything he does, and he's desperately afraid he'll screw it up and set a worse example. Make history worse when he's trying to make it better. It's a beautifully well-done bit of tension that uses time travel as the hook to show both how difficult mentorship is and also how irritating one's earlier naive self would be.

He wondered if it was at all possible to give this idiot some lessons in basic politics. That was always the dream, wasn't it? "I wish I'd known then what I know now"? But when you got older you found out that you now wasn't you then. You then was a twerp. You then was what you had to be to start out on the rocky road of becoming you now, and one of the rocky patches on that road was being a twerp.

The backdrop of this story, as advertised by the map at the front of the book, is a revolution of sorts. And the revolution does matter, but not in the obvious way. It creates space and circumstance for some other things to happen that are all about the abuse of policing as a tool of politics rather than Vimes's principle of keeping the peace. I mentioned when reviewing Men at Arms that it was an awkward book to read in the United States in 2020. This book tackles the ethics of policing head-on, in exactly the way that book didn't.

It's also a marvelous bit of competence porn. Somehow over the years, Vimes has become extremely good at what he does, and not just in the obvious cop-walking-a-beat sort of ways. He's become a leader. It's not something he thinks about, even when thrown back in time, but it's something Pratchett can show the reader directly, and have the other characters in the book comment on.

There is so much more that I'd like to say, but so much would be spoilers, and I think Night Watch is more effective when you have the suspense of slowly puzzling out what's going to happen. Pratchett's pacing is exquisite.

It's also one of the rare Discworld novels where Pratchett fully commits to a point of view and lets Vimes tell the story. There are a few interludes with other people, but the only other significant protagonist is, quite fittingly, Vetinari. I won't say anything more about that except to note that the relationship between Vimes and Vetinari is one of the best bits of fascinating subtlety in all of Discworld.

I think it's also telling that nothing about Night Watch reads as parody. Sure, there is a nod to Back to the Future in the lightning storm, and it's impossible to write a book about police and street revolutions without making the reader think about Les Miserables, but nothing about this plot matches either of those stories. This is Pratchett telling his own story in his own world, unapologetically, and without trying to wedge it into parody shape, and it is so much the better book for it.

The one quibble I have with the book is that the bits with the Time Monks don't really work. Lu-Tze is annoying and flippant given the emotional stakes of this story, the interludes with him are frustrating and out of step with the rest of the book, and the time travel hand-waving doesn't add much. I see structurally why Pratchett put this in: it gives Vimes (and the reader) a time frame and a deadline, it establishes some of the ground rules and stakes, and it provides a couple of important opportunities for exposition so that the reader doesn't get lost. But it's not good story. The rest of the book is so amazingly good, though, that it doesn't matter (and the framing stories for "what if?" explorations almost never make much sense).

The other thing I have a bit of a quibble with is outside the book. Night Watch, as you may have guessed by now, is the origin of the May 25th Pratchett memes that you will be familiar with if you've spent much time around SFF fandom. But this book is dramatically different from what I was expecting based on the memes. You will, for example, see a lot of people posting "Truth, Justice, Freedom, Reasonably Priced Love, And a Hard-Boiled Egg!", and before reading the book it sounds like a Pratchett-style humorous revolutionary slogan. And I guess it is, sort of, but, well... I have to quote the scene:

"You'd like Freedom, Truth, and Justice, wouldn't you, Comrade Sergeant?" said Reg encouragingly. "I'd like a hard-boiled egg," said Vimes, shaking the match out. There was some nervous laughter, but Reg looked offended. "In the circumstances, Sergeant, I think we should set our sights a little higher--" "Well, yes, we could," said Vimes, coming down the steps. He glanced at the sheets of papers in front of Reg. The man cared. He really did. And he was serious. He really was. "But...well, Reg, tomorrow the sun will come up again, and I'm pretty sure that whatever happens we won't have found Freedom, and there won't be a whole lot of Justice, and I'm damn sure we won't have found Truth. But it's just possible that I might get a hard-boiled egg."

I think I'm feeling defensive of the heart of this book because it's such an emotional gut punch and says such complicated and nuanced things about politics and ethics (and such deeply cynical things about revolution). But I think if I were to try to represent this story in a meme, it would be the "angels rise up" song, with all the layers of meaning that it gains in this story. I'm still at the point where the lilac sprigs remind me of Sergeant Colon becoming quietly furious at the overstep of someone who wasn't there.

There's one other thing I want to say about that scene: I'm not naturally on Vimes's side of this argument. I think it's important to note that Vimes's attitude throughout this book is profoundly, deeply conservative. The hard-boiled egg captures that perfectly: it's a bit of physical comfort, something you can buy or make, something that's part of the day-to-day wheels of the city that Vimes talks about elsewhere in Night Watch. It's a rejection of revolution, something that Vimes does elsewhere far more explicitly. Vimes is a cop. He is in some profound sense a defender of the status quo. He doesn't believe things are going to fundamentally change, and it's not clear he would want them to if they did.

And yet. And yet, this is where Pratchett's Dickensian morality comes out. Vimes is a conservative at heart. He's grumpy and cynical and jaded and he doesn't like change. But if you put him in a situation where people are being hurt, he will break every rule and twist every principle to stop it.

He wanted to go home. He wanted it so much that he trembled at the thought. But if the price of that was selling good men to the night, if the price was filling those graves, if the price was not fighting with every trick he knew... then it was too high. It wasn't a decision that he was making, he knew. It was happening far below the areas of the brain that made decisions. It was something built in. There was no universe, anywhere, where a Sam Vimes would give in on this, because if he did then he wouldn't be Sam Vimes any more.

This is truly exceptional stuff. It is the best Discworld novel I have read, by far. I feel like this was the Watch novel that Pratchett was always trying to write, and he had to write five other novels first to figure out how to write it. And maybe to prepare Discworld readers to read it. There are a lot of Discworld novels that are great on their own merits, but also it is 100% worth reading all the Watch novels just so that you can read this book.

Followed in publication order by The Wee Free Men and later, thematically, by Thud!.

Rating: 10 out of 10

"Cgroup v2 device controller has no interface files and is implemented on top of cgroup BPF." https://www.kernel.org/doc/Documentation/admin-guide/cgroup-v2.rst

That is just awesome, nothing to see here, go look at the BPF documents if you have cgroup v2. With cgroup v1, if you wanted to know what devices were permitted, you would just cat /sys/fs/cgroup/XX/devices.list and you were done!

The kernel documentation is not very helpful. Sure, it's something in BPF and has something to do with the cgroup BPF specifically, but what does that mean?

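There doesn't seem to be an easy corresponding method to get the same information. So to see what restrictions a docker container has, we will have to find the container's cgroup, find the eBPF program attached to that cgroup, dump that program to a text file, and then interpret the eBPF instructions.

With the docker ps command, you get the short ID. To get the long ID you can either use the --no-trunc flag or just guess from the short ID. I usually do the second.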

    $ docker ps
    CONTAINER ID   IMAGE            COMMAND       CREATED          STATUS          PORTS     NAMES
    a3c53d8aaec2   debian:minicom   "/bin/bash"   19 minutes ago   Up 19 minutes             inspiring_shannon

The cgroup directory is then /sys/fs/cgroup/system.slice/docker-a3c53d8aaec23c256124f03d208732484714219c8b5f90dc1c3b4ab00f0b7779.scope/. Notice that the last directory has docker- followed by the long ID (the short ID is its first 12 characters).
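As a rough sketch of that path construction, assuming the systemd cgroup driver and the default /sys/fs/cgroup mount point used in this example (the helper name docker_cgroup_path is made up for illustration and is not part of Docker):

```python
from pathlib import PurePosixPath


def docker_cgroup_path(long_id: str,
                       mount_point: str = "/sys/fs/cgroup",
                       slice_name: str = "system.slice") -> str:
    """Build the cgroup v2 directory for a container from its long ID.

    Assumption: the layout matches the one shown in this post
    (systemd cgroup driver, scope named docker-<long id>.scope).
    """
    hex_digits = set("0123456789abcdef")
    if len(long_id) != 64 or not set(long_id) <= hex_digits:
        raise ValueError("expected the 64-character long container ID")
    return str(PurePosixPath(mount_point) / slice_name / f"docker-{long_id}.scope")


long_id = "a3c53d8aaec23c256124f03d208732484714219c8b5f90dc1c3b4ab00f0b7779"
print(docker_cgroup_path(long_id))
```

Other cgroup drivers or a custom cgroup parent will put the container somewhere else, so treat the result as a first guess to check rather than a guarantee.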

If you're not sure of the exact path: /sys/fs/cgroup is the cgroup v2 mount point, which can be found with mount -t cgroup2, and the rest is the actual cgroup name. If you know the process running in the container, then the cgroup column in ps will show you.

    $ ps -o pid,comm,cgroup 140064
        PID COMMAND         CGROUP
     140064 bash            0::/system.slice/docker-a3c53d8aaec23c256124f03d208732484714219c8b5f90dc1c3b4ab00f0b7779.scope

    $ sudo bpftool cgroup list /sys/fs/cgroup/system.slice/docker-a3c53d8aaec23c256124f03d208732484714219c8b5f90dc1c3b4ab00f0b7779.scope/

    ID       AttachType      AttachFlags     Name
    90       cgroup_device   multi

    sudo bpftool prog dump xlated id 90 > myebpf.txt

    0: (61) r2 = *(u32 *)(r1 +0)
    1: (54) w2 &= 65535
    2: (61) r3 = *(u32 *)(r1 +0)
    3: (74) w3 >>= 16
    4: (61) r4 = *(u32 *)(r1 +4)
    5: (61) r5 = *(u32 *)(r1 +8)

What we find is that once we get past the first few lines filtering the given value, the comparison lines have:

    63: (55) if r2 != 0x2 goto pc+4
    64: (55) if r4 != 0x64 goto pc+3
    65: (55) if r5 != 0x2a goto pc+2
    66: (b4) w0 = 1
    67: (95) exit

This is a container using the option --device-cgroup-rule='c 100:42 rwm'. It is checking if r2 (device type) is 2 (char) and r4 (major device number) is 0x64 or 100 and r5 (minor device number) is 0x2a or 42. If any of those are not true, move to the next section, otherwise return with 1 (permit). We have all access modes permitted so it doesn't check for it.
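To make the register shuffling easier to follow, here is a small Python model of what instructions 0 to 5 and 63 to 67 appear to do. It assumes the kernel's bpf_cgroup_dev_ctx layout, where the first 32-bit word packs the access bits into the upper half and the device type into the lower half; the constant and function names are mine, chosen to mirror the BPF_DEVCG_* values.

```python
# Values mirroring BPF_DEVCG_DEV_* and BPF_DEVCG_ACC_* from linux/bpf.h
DEV_BLOCK, DEV_CHAR = 1, 2
ACC_MKNOD, ACC_READ, ACC_WRITE = 1, 2, 4


def decode_ctx(access_type: int, major: int, minor: int):
    """Model of instructions 0-5: unpack the context into r2..r5."""
    dev_type = access_type & 0xFFFF  # 1: w2 &= 65535
    access = access_type >> 16       # 3: w3 >>= 16
    return dev_type, access, major, minor


def rule_c_100_42_rwm(access_type: int, major: int, minor: int) -> bool:
    """Model of instructions 63-67 for the rule 'c 100:42 rwm'."""
    dev_type, _access, major, minor = decode_ctx(access_type, major, minor)
    # Any failed comparison jumps past "w0 = 1; exit", i.e. on to the next rule.
    # The access bits are not inspected because r, w and m are all allowed.
    return dev_type == DEV_CHAR and major == 0x64 and minor == 0x2A


# A read+write request on char device 100:42
packed = ((ACC_READ | ACC_WRITE) << 16) | DEV_CHAR
print(decode_ctx(packed, 100, 42))
print(rule_c_100_42_rwm(packed, 100, 42))
```

This only models the one rule shown here; the real program chains many such sections and falls through to a final verdict when nothing matches.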

The previous example has all permissions for our device with id 100:42. What about if we only want read access, with the option --device-cgroup-rule='c 100:42 r'? The resulting eBPF is:

    63: (55) if r2 != 0x2 goto pc+7
    64: (bc) w1 = w3
    65: (54) w1 &= 2
    66: (5d) if r1 != r3 goto pc+4
    67: (55) if r4 != 0x64 goto pc+3
    68: (55) if r5 != 0x2a goto pc+2
    69: (b4) w0 = 1
    70: (95) exit

The code is almost the same, but we are checking that w3 only has the second bit set, which is for reading, effectively checking for X==X&2. It's a cautious approach, meaning no access still passes but multiple bits set will fail.
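The X==X&2 test is easy to try out by hand. A minimal sketch in Python, assuming the access bits are mknod=1, read=2 and write=4 as in the kernel's BPF_DEVCG_ACC_* constants (the function name is invented for this example):

```python
ACC_MKNOD, ACC_READ, ACC_WRITE = 1, 2, 4


def access_allowed(requested: int, allowed: int = ACC_READ) -> bool:
    """Model of instructions 64-66: w1 = w3; w1 &= 2; reject if r1 != r3."""
    return (requested & allowed) == requested


# Requests: nothing, mknod, read, write, read+write
results = {req: access_allowed(req) for req in (0, 1, 2, 4, 6)}
print(results)
# {0: True, 1: False, 2: True, 4: False, 6: False}
```

So a request with no access bits or with only the read bit passes, while anything that also asks for write or mknod fails this rule.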

What about the --device flag? This flag actually does two things. The first is to create the device file in the container's /dev directory, effectively doing a mknod command. The second thing is to adjust the eBPF program. If the device file we specified actually did have a major number of 100 and a minor of 42, the eBPF would look exactly like the above snippets.

What does the --privileged flag do? This lets the container have full access to all the devices (if the user running the process is allowed). Like the --device flag, it makes the device files as well, but what does the filtering look like? We still have a cgroup but the eBPF program is greatly simplified; here it is in full:

    0: (61) r2 = *(u32 *)(r1 +0)
    1: (54) w2 &= 65535
    2: (61) r3 = *(u32 *)(r1 +0)
    3: (74) w3 >>= 16
    4: (61) r4 = *(u32 *)(r1 +4)
    5: (61) r5 = *(u32 *)(r1 +8)
    6: (b4) w0 = 1
    7: (95) exit

There are the usual setup lines and then return 1. Everyone is a winner for all devices and access types!

Hello folks!

We recently received a case letting us know that Dataproc 2.1.1 was unable to write to a BigQuery table with a column of type JSON. Although the BigQuery connector for Spark has had support for JSON columns since 0.28.0, the Dataproc images on the 2.1 line still cannot create tables with JSON columns or write to existing tables with JSON columns.

The customer has graciously granted permission to share the code we developed to allow this operation. So if you are interested in working with JSON column tables on Dataproc 2.1, please continue reading!

Use the following gcloud command to create your single-node Dataproc cluster:


    IMAGE_VERSION=2.1.1-debian11
    REGION=us-west1
    ZONE=${REGION}-a
    CLUSTER_NAME=pick-a-cluster-name
    gcloud dataproc clusters create ${CLUSTER_NAME} \
      --region ${REGION} \
      --zone ${ZONE} \
      --single-node \
      --master-machine-type n1-standard-4 \
      --master-boot-disk-type pd-ssd \
      --master-boot-disk-size 50 \
      --image-version ${IMAGE_VERSION} \
      --max-idle=90m \
      --enable-component-gateway \
      --scopes 'https://www.googleapis.com/auth/cloud-platform'

The following file is the Scala code used to write JSON structured data to a BigQuery table using Spark. The file following this one can be executed from your single-node Dataproc cluster.

Main.scala

    import org.apache.spark.sql.functions.col
    import org.apache.spark.sql.types.{Metadata, StringType, StructField, StructType}
    import org.apache.spark.sql.{Row, SaveMode, SparkSession}
    import org.apache.spark.sql.avro
    import org.apache.avro.specific

    val env = "x"
    val my_bucket = "cjac-docker-on-yarn"
    val my_table = "dataset.testavro2"

    val spark = env match {
      case "local" =>
        SparkSession
          .builder()
          .config("temporaryGcsBucket", my_bucket)
          .master("local")
          .appName("isssue_115574")
          .getOrCreate()
      case _ =>
        SparkSession
          .builder()
          .config("temporaryGcsBucket", my_bucket)
          .appName("isssue_115574")
          .getOrCreate()
    }

    // create DF with some data
    val someData = Seq(
      Row("""{"name":"name1", "age": 10 }""", "id1"),
      Row("""{"name":"name2", "age": 20 }""", "id2")
    )

    val schema = StructType(
      Seq(
        StructField("user_age", StringType, true),
        StructField("id", StringType, true)
      )
    )

    val avroFileName = s"gs://${my_bucket}/issue_115574/someData.avro"

    val someDF = spark.createDataFrame(spark.sparkContext.parallelize(someData), schema)
    someDF.write.format("avro").mode("overwrite").save(avroFileName)

    val avroDF = spark.read.format("avro").load(avroFileName)

    // set metadata
    val dfJSON = avroDF
      .withColumn("user_age_no_metadata", col("user_age"))
      .withMetadata("user_age", Metadata.fromJson("""{"sqlType":"JSON"}"""))

    dfJSON.show()
    dfJSON.printSchema

    // write to BigQuery
    dfJSON.write.format("bigquery")
      .mode(SaveMode.Overwrite)
      .option("writeMethod", "indirect")
      .option("intermediateFormat", "avro")
      .option("useAvroLogicalTypes", "true")
      .option("table", my_table)
      .save()

repro.sh:

    #!/bin/bash

    PROJECT_ID=set-yours-here
    DATASET_NAME=dataset
    TABLE_NAME=testavro2

    # We have to remove all of the existing spark bigquery jars from the local
    # filesystem, as we will be using the symbols from the
    # spark-3.3-bigquery-0.30.0.jar below. Having existing jar files on the
    # local filesystem will result in those symbols having higher precedence
    # than the one loaded with the spark-shell.
    sudo find /usr -name 'spark*bigquery*jar' -delete

    # Remove the table from the bigquery dataset if it exists
    bq rm -f -t $PROJECT_ID:$DATASET_NAME.$TABLE_NAME

    # Create the table with a JSON type column
    bq mk --table $PROJECT_ID:$DATASET_NAME.$TABLE_NAME \
      user_age:JSON,id:STRING,user_age_no_metadata:STRING

    # Load the example Main.scala
    spark-shell -i Main.scala \
      --jars /usr/lib/spark/external/spark-avro.jar,gs://spark-lib/bigquery/spark-3.3-bigquery-0.30.0.jar

    # Show the table schema when we use bq mk --table and then load the avro
    bq query --use_legacy_sql=false \
      "SELECT ddl FROM $DATASET_NAME.INFORMATION_SCHEMA.TABLES where table_name='$TABLE_NAME'"

    # Remove the table so that we can see that the table is created should it not exist
    bq rm -f -t $PROJECT_ID:$DATASET_NAME.$TABLE_NAME

    # Dynamically generate a DataFrame, store it to avro, load that avro,
    # and write the avro to BigQuery, creating the table if it does not already exist
    spark-shell -i Main.scala \
      --jars /usr/lib/spark/external/spark-avro.jar,gs://spark-lib/bigquery/spark-3.3-bigquery-0.30.0.jar

    # Show that the table schema does not differ from one created with a bq mk --table
    bq query --use_legacy_sql=false \
      "SELECT ddl FROM $DATASET_NAME.INFORMATION_SCHEMA.TABLES where table_name='$TABLE_NAME'"

Google BigQuery has supported JSON data since October of 2022, but until now, it has not been possible, on generally available Dataproc clusters, to interact with these columns using the Spark BigQuery Connector. JSON column type support was introduced in spark-bigquery-connector release 0.28.0.

AMD Issues

It has apparently been a hard couple of weeks for AMD. The first problem is the TPM (Trusted Platform Module) issue demonstrated by a couple of security researchers. From what is known, with about $200 worth of tools and some time, you can hack into somebody's machine if you have physical access. Ironically, MS made a huge show about TPM and also made it sort of a requirement for anyone who wanted to have Windows 11. I remember Matthew Garrett sharing about TPM and issues with Lenovo laptops. While AMD has acknowledged the issue, its response has been somewhat wishy-washy. But this is not the only issue that has been plaguing AMD. There have been reports of AMD chips literally exploding, and again AMD issued a somewhat wishy-washy response.

Asus, though, has made some changes, but whether they are for Zen4 or only 5 parts is not known. Most people are expecting a recession in I.T. hardware this year as well as next year due to high prices. No idea if things will change, if ever.

I do try not to report, because right now every other day we see the Govt. somewhere or other curtailing our rights, and most people are mute.

Signing out, till later.

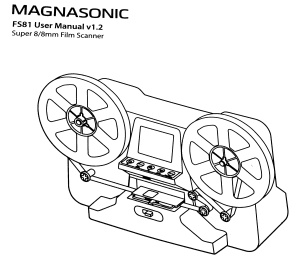

After my father passed away, I brought home most of the personal items he had, both at home and at his office. Among many, many (many, many, many) other things, I brought two of his personal treasures: his photo collection and a box with the 8mm movies he shot approximately between 1956 and 1989, when he was forced into modernity and got a portable videocassette recorder.

I have talked with several friends, as I really want to get it all in a digital format, and while I've been making slow but steady advances scanning the photo reels, I was particularly dismayed (even though it was most expected; most personal electronic devices aren't meant to last over 50 years) to find out the 8mm projector was no longer in working condition; the lamp and the fans work, but the spindles won't spin. Of course, it is quite likely it is easy to fix, but it is beyond my tinkering abilities, and finding photographic equipment repair shops is no longer easy. Anyway, even if I got it fixed, filming a movie from a screen, even with a decent camera, is a lousy way to get it digitized.

But almost by mere chance, I got in contact with my cousin Daniel, who came to Mexico to visit his parents, and had brought with him precisely an 8mm/Super8 movie scanner! It is a much simpler piece of equipment than I had expected, and while it does present some minor glitches (e.g. the vertical framing slightly loses alignment over the course of a medium-length film scanning session, and no adjustment is possible while the scan is ongoing), this is something that can be decently fixed in post-processing, and a scanning session can be split with no ill effects. Anyway, it is quite uncommon that a mid-length (5min) film can be done without interrupting, e.g. to join a splice, mostly given my father didn't just film, but also edited a lot (that is, it's not just family pictures, but all different kinds of fiction and documentary work he did).


So, Daniel lent me a great, brand new, entry-level film scanner; I
rushed to scan as many movies as possible before his return to the USA
this week, but he insisted he bought it to help preserve our family's
memory, and given that several of us cousins still live in Mexico, I
could keep hold of it so any of the other cousins can get to it more
easily. Of course, I am thankful and delighted!

So, this equipment is a Magnasonic FS81. It is entry-level, as it
lacks some adjustment abilities a professional one would surely have,
and I'm sure a better scanner would make the job faster, but it's
infinitely superior to not having it!

The scanner processes roughly two frames per second (while the nominal
8mm/Super8 speed is 24 frames per second), so a 3 minute film reel
takes a bit over 35 minutes, and a long, ~20 minute film reel
takes close to 4hr, if nothing gets in your way :-) And yes, with
longer reels, the probability of a splice breaking is way higher than
with a short one, not only because there is simply more film to
process, but also because, both at the unwinding and at the receiving
reels, mechanics play their roles.
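The arithmetic behind those figures can be sketched in a few lines of Python. This is only a back-of-the-envelope estimate: the function name and its defaults are made up for illustration, and the two rates are the approximate ones quoted above, not measured values.

```python
def scan_minutes(reel_minutes, playback_fps=24.0, scan_fps=2.0):
    """Minutes needed to scan a reel, given its running time in minutes.

    playback_fps and scan_fps are the rough figures from the text
    (assumptions, not measurements of the actual scanner).
    """
    frames = reel_minutes * 60 * playback_fps  # total frames on the reel
    return frames / scan_fps / 60              # scanning seconds -> minutes


# The three reel sizes mentioned in this post.
for reel in (3, 5, 20):
    minutes = scan_minutes(reel)
    print(f"{reel:>2} min reel: {minutes:.0f} min ({minutes / 60:.1f} h)")
```

With these assumed rates, every minute of film costs about twelve minutes of scanning, not counting any stops to rejoin a splice.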

The films don't advance smoothly, but jump to position each frame in
the scanner's screen, so every bit of film gets its fair share of
gentle tugs.

My professional consultant on how and what to do is my good friend
Chema Serralde,
who has stopped me from doing several things I would otherwise regret
later (such as joining spliced tapes with acidic chemical
adhesives such as Kola Loka, a.k.a. Krazy Glue; even if it's a
bit trickier to do, he insisted I should use simple transparent
tape if I'm not buying fancy things such as film adhesive). Chema also
explained to me the importance of the loopers ("las Lupes" in his
technical Spanish translation), which I feared increased the
likelihood of breaking a bit of old glue due to the angle at which the
film gets pulled, but which, if skipped, result in films with too much
jumping.

Not all of the movies I have are for public sharing. Some of them are
just family movies, with high personal value, but probably of very
little interest to others. But some are! I have been uploading some of
the movies, after minor post-processing, to the Internet
Archive. Among them:

Anyway, I have a long way to go with the scanning. I have 20 3min reels,
19 5min reels, and 8 20min reels. I want to check the scanning
quality, but I think my 20min reels are mostly processed (we paid for
scanning them some years ago). I have mostly finished the 3min reels, but
might have to go over some of them again due to the learning process.

And, well, I'm having quite a bit of fun in the process!

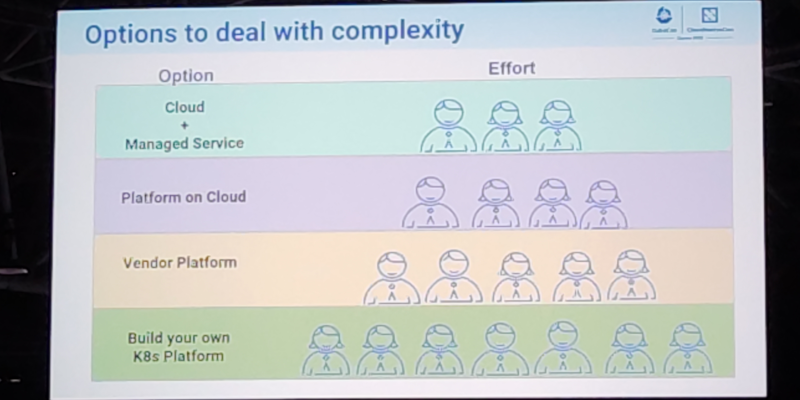

This post serves as a report from my attendance at KubeCon and CloudNativeCon 2023 Europe, which took place in
Amsterdam in April 2023. It was my second time physically attending this conference; the first one was in
Austin, Texas (USA) in 2017. I also attended once in a virtual fashion.

The content here is mostly written for the sake of my own recollection and learning, and is based on
the notes I took during the event.

The very first session was the opening keynote, which brought the whole crowd together to bootstrap the event and
share the excitement about the days ahead. Some astonishing numbers were announced: there were more than
10,000 people attending, and apparently it could confidently be said that it was the largest open source
technology conference taking place in Europe in recent times.

It was also announced that the next couple of iterations of the event will be run in China in September 2023
and Paris in March 2024.

More numbers: the CNCF was hosting about 159 projects, involving 1,300 maintainers and about 200,000
contributors. The cloud-native community is ever-increasing, and there seems to be a strong trend in the
industry towards cloud-native technology adoption and all things related to PaaS and IaaS.

The event program had different tracks, and in each one there was an interesting mix of low-level and
higher-level talks for a variety of audiences. On many occasions I found that reading the talk title alone was not
enough to know in advance if a talk was a 101 kind of thing or for experienced engineers. But unlike in
previous editions, I didn't have the feeling that the purpose of the conference was to try selling me
anything. Obviously, speakers would make sure to mention, or highlight in a subtle way, the involvement of a
given company in a given solution or piece of the ecosystem. But it was non-invasive and fair enough for me.

On a different note, I found the breakout rooms to be often small. I think there were only a couple of rooms
that could accommodate more than 500 people, which is a fairly small allowance for 10k attendees. I realized
with frustration that the more interesting talks were immediately fully booked, with people waiting in line
some 45 minutes before the session time. Because of this, I missed a few important sessions that I'll
hopefully watch online later.

Finally, on the more technical side, I've learned many things, which I'll group by topic instead of
by session, given how some subjects were mentioned in several talks.

On gitops and CI/CD pipelines

Most of the mentions went to FluxCD and ArgoCD. At
that point there were no doubts that gitops was a mature approach and that both Flux and ArgoCD could do an
excellent job. ArgoCD seemed a bit more over-engineered, aiming to be a more general purpose CD pipeline, and Flux
felt a bit more tailored for simpler gitops setups. I discovered that both have nice web user interfaces that
I wasn't previously familiar with.

However, in two different talks I got the impression that the initial setup of both was simple, but that migrating
your current workflow to gitops could result in a bumpy ride. That is, the challenge is not deploying
Flux/Argo itself, but moving everything into a state that both humans and Flux/Argo can understand. I also
saw some curious mentions of the config drift that can happen in some cases, even if the goal of gitops is
precisely for that to never happen. Such mentions were usually accompanied by some hints on how to handle
the situation by hand.
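To make the idea of config drift concrete, here is a minimal sketch in Python. It is not how Flux or ArgoCD detect drift internally: it simply compares two plain dictionaries, one standing in for the state declared in git and one for the state found in the cluster. The function name and the example manifests are hypothetical.

```python
def find_drift(desired, live, path=""):
    """List (path, desired_value, live_value) for every differing field.

    Both arguments are nested dicts; a key missing on one side is
    reported with None as its value on that side.
    """
    drift = []
    for key in sorted(set(desired) | set(live)):
        here = f"{path}.{key}" if path else key
        want, have = desired.get(key), live.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            # Recurse into nested sections such as "spec".
            drift.extend(find_drift(want, have, here))
        elif want != have:
            drift.append((here, want, have))
    return drift


# Hypothetical example: someone scaled the deployment by hand.
desired = {"spec": {"replicas": 3, "image": "example/app:1.2"}}
live = {"spec": {"replicas": 5, "image": "example/app:1.2"}}

for field, want, have in find_drift(desired, live):
    print(f"{field}: git says {want!r}, cluster has {have!r}")
```

In a gitops setup the tool would normally resolve such a difference in favour of git, so drift only lingers when automatic reconciliation is disabled or something keeps rewriting the live object.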

It is worth mentioning that I missed practical information about one of the key pieces of this whole gitops story:
building container images. Most of the showcased scenarios were using pre-built container images, so in that
sense they were simple. Building and pushing to an image registry is one of the two key points we would need
to solve in Toolforge Kubernetes if adopting gitops.

In general, even if gitops was already on our radar for
Toolforge Kubernetes,
I think it climbed a few steps in my priority list after the conference.

I also learned about this site: https://opengitops.dev/.
